Testing the working prototype

In our first round of testing (carried out a couple of weeks ago) we experienced a few technical issues. This meant we couldn't evaluate things as fully as we'd hoped.

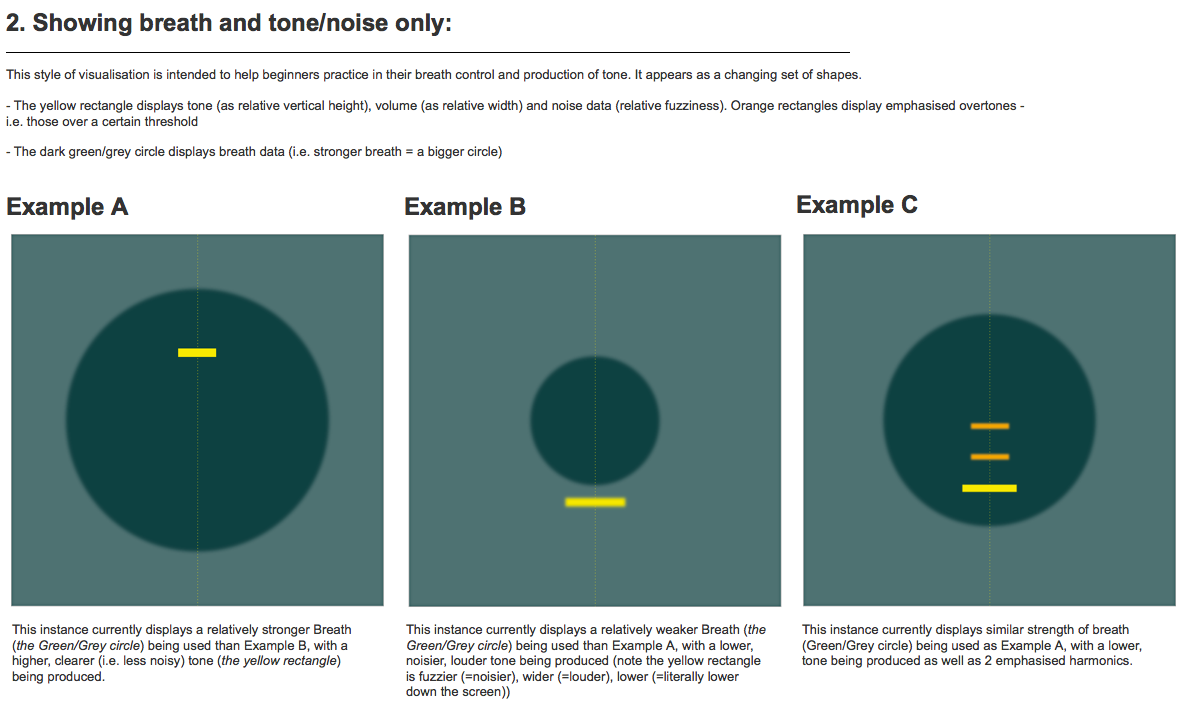

Our original plan was to test 2 versions of the data visualisations - a linear 'timeline' readout that shows how you're playing over time, and a 'non-linear' breath/tone practice visualiser (see our last post for more details).

As it happens, we found that the data (sent via Arduino and converted to OSC) wasn't being sent properly to the Visualiser app (running in OpenFrameworks). This meant we got a 'glitchy' visualisation (which wasn't hugely useful!).

Note how the projected visualisation isn't smooth and is therefore hard to make sense of

Feedback about the instrument itself

However, we were able to get some good feedback about the hardware itself. It seems that the 3D printed Ney was playable and produced a good sound. Some of our musician's feedback included:

- 'it's harder to play the upper register than a reed Ney - usually the lower register is harder to play'

- 'it's not such a rich sound as [a reed Ney]'

It turns out that reed Neys have a constriction near the top (where the first node meets the second). We didn't include this in the printed Ney, which is possibly why the upper register was harder to reach.

Feedback about the proposition

Using some of the design mockups for a practice 'app', we were also able to get feedback about the UX concepts we've been exploring - namely using the object as an aid for teaching and practice. (The musician we tested with is a professional performer as well as a private tutor.)

She felt that the concept of being able to see what you are doing physically, and map that to the sounds you are producing, could be useful. However, she felt this would have limited value to beginners: seeing you were doing something 'wrong' (i.e. producing a noisy tone when you're trying to produce a clean tone) wasn't a substitute for understanding what you need to do to make it better. She felt this was only something you could get from a teacher or your own experience.

This was a great insight to get and could have some bearing on exactly how we envisage this object working as a practice/teaching aid and who it's ultimately aimed at. As an aside, she felt there would be secondary uses for the visualisations (as a way of helping to produce tablature etc.).

Looking forward to testing again

The questions and concepts mentioned above are things we want to investigate further tomorrow, when we test the prototype again.

The good news is we've been working hard as a team (special mention to Aris and Stef) to get things working. As of this morning the visualisations are working properly! (linear 'timeline' version shown below):

We've got a bit to do - we need to smooth the breath data and raise the contrast of the fingering data (to make them more usable). Stef's also working on the breath/tone visualisation today (mock up shown below and more info here):
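As an aside on the breath smoothing mentioned above: one simple option is an exponential moving average, where each new reading only nudges the displayed value. The sketch below is an illustration rather than our actual code (the Visualiser runs in OpenFrameworks, and we haven't settled on a method yet). The function name `smooth_breath` and the `alpha` value are assumptions made up for this example.

```python
def smooth_breath(samples, alpha=0.2):
    """Smooth a sequence of breath readings with an exponential moving average.

    alpha is a hypothetical tuning value: closer to 0 gives a smoother but
    laggier line, closer to 1 follows the raw sensor data more tightly.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")

    smoothed = []
    previous = None
    for value in samples:
        if previous is None:
            # Start from the first reading so the line doesn't ramp up from zero.
            previous = float(value)
        else:
            # Blend the new reading with the running average.
            previous = alpha * value + (1 - alpha) * previous
        smoothed.append(previous)
    return smoothed


if __name__ == "__main__":
    # Made-up readings with a single glitchy spike in the middle.
    raw = [0, 0, 100, 0, 0]
    print(smooth_breath(raw))
```

A lower `alpha` flattens spikes more aggressively, at the cost of the visualisation reacting more slowly to real changes in breath, so the right value would need tuning with a player.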

We'll let you know how it all goes!